Web Audio is specified in the W3C Web Audio API specification and continues to evolve under the guidance of the W3C Audio Working Group. This release adds Microsoft Edge to the list of browsers that support Web Audio today, and establishes the specification as a broadly supported and substantially interoperable web standard.

Web Audio Capabilities

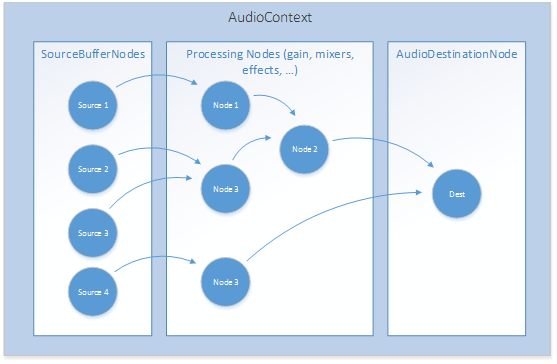

Web Audio is based on concepts that are key to managing and playing multiple sound sources together. These start with the AudioContext and a variety of Web Audio nodes. The AudioContext defines the audio workspace, and the nodes implement a range of audio processing capabilities. The variety of nodes available, and the ability to connect them in a custom manner in the AudioContext, make Web Audio highly flexible.

AudioBufferSourceNodes are typically used to hold small audio fragments. These get connected to different processing nodes, and eventually to the AudioDestinationNode, which sends the output stream to the audio stack to play through speakers.
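As a minimal sketch of that source-to-destination flow, the following plays an already decoded AudioBuffer through a gain node. The function name `playBuffer` and its parameters are illustrative and not taken from the demo; it assumes the caller supplies a running AudioContext and a decoded buffer.

```javascript
// Sketch only: `playBuffer`, `audioBuffer` and `volume` are hypothetical names.
function playBuffer(audioContext, audioBuffer, volume) {
  // An AudioBufferSourceNode is a one-shot source holding the decoded audio.
  var source = audioContext.createBufferSource();
  source.buffer = audioBuffer;

  // A GainNode scales the signal; 1 leaves the level unchanged, 0 is silence.
  var gain = audioContext.createGain();
  gain.gain.value = volume;

  // source -> gain -> destination (the speakers)
  source.connect(gain);
  gain.connect(audioContext.destination);

  source.start(0); // start playback immediately
  return source;
}
```

A new source node is needed for each playback, because an AudioBufferSourceNode can only be started once.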

In concept, the AudioContext is very similar to the audio graph implemented in XAudio2 to support gaming audio in Classic Windows Applications. Both can connect audio sources through gain and mixing nodes to play sounds with accurate time synchronization.

Web Audio goes further, and includes a range of audio effects that are supported through a variety of processing nodes. These include effects that can pan audio across the sound stage (PannerNode), precisely delay individual audio streams (DelayNode), and recreate sound listening environments, like stadiums or concert halls (ConvolverNode). There are oscillators to generate periodic waveforms (OscillatorNode) and many more.
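To make the idea of chaining effect nodes concrete, here is a small sketch that sends a one-second tone from an OscillatorNode through a DelayNode and a StereoPannerNode. The helper name `playDelayedTone` and all parameter values are assumptions chosen for illustration.

```javascript
// Sketch only: `playDelayedTone` is a hypothetical helper, not part of the demo.
function playDelayedTone(audioContext, frequency, delaySeconds, pan) {
  var osc = audioContext.createOscillator();
  osc.type = 'sine';
  osc.frequency.value = frequency; // in Hz

  // The argument is the maximum delay in seconds; delaySeconds must not exceed it.
  var delay = audioContext.createDelay(5.0);
  delay.delayTime.value = delaySeconds;

  var panner = audioContext.createStereoPanner();
  panner.pan.value = pan; // -1 is full left, 0 is center, 1 is full right

  // oscillator -> delay -> panner -> destination
  osc.connect(delay);
  delay.connect(panner);
  panner.connect(audioContext.destination);

  var now = audioContext.currentTime;
  osc.start(now);
  osc.stop(now + 1); // the oscillator runs for one second
  return osc;
}
```

For example, `playDelayedTone(audioContext, 440, 0.5, -1)` would play an A4 tone on the left channel, heard half a second after the oscillator starts.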

Microsoft Edge supports all of the following Web Audio interfaces and node types:

- The AudioContext Interface

- The OfflineAudioContext Interface

- The AudioNode Interface

- The AudioDestinationNode Interface

- The AudioParam Interface

- The GainNode Interface

- The DelayNode Interface

- The AudioBuffer Interface

- The AudioBufferSourceNode Interface

- The ScriptProcessorNode Interface

- The AudioProcessingEvent Interface

- The PannerNode Interface

- The AudioListener Interface

- The StereoPannerNode Interface

- The ConvolverNode Interface

- The AnalyserNode Interface

- The ChannelSplitterNode Interface

- The ChannelMergerNode Interface

- The DynamicsCompressorNode Interface

- The BiquadFilterNode Interface

- The WaveShaperNode Interface

- The OscillatorNode Interface

- The PeriodicWave Interface

Special Media Sources

There are two other node types that Microsoft Edge implements that apply to specific use cases. These are:

Media Capture integration with Web Audio

The MediaStreamAudioSourceNode accepts stream input from the Media Capture and Streams API, also known as getUserMedia after one of its primary stream capture interfaces. A recent Microsoft Edge Dev Blog post announced our support for media capture in Microsoft Edge. As part of this implementation, streams are connected to Web Audio via the MediaStreamAudioSourceNode. Web apps can create a stream source in the audio context and include captured audio from, for example, the system microphone. The specification takes privacy precautions into account, and these are discussed in our previous blog post. The upside is that audio streams can be processed in Web Audio for gaming, music or RTC uses.
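A rough sketch of that hand-off is below. It assumes the promise-based `getUserMedia` on a `mediaDevices` object (in a browser, `navigator.mediaDevices`) that the caller passes in; the function name `connectMicrophone` is hypothetical.

```javascript
// Sketch only: `connectMicrophone` is a hypothetical helper.
// `mediaDevices` is expected to be navigator.mediaDevices in a browser.
function connectMicrophone(audioContext, mediaDevices) {
  // Ask for audio only; the browser prompts the user for permission.
  return mediaDevices.getUserMedia({ audio: true }).then(function (stream) {
    // Wrap the captured stream in a source node for the audio graph.
    return audioContext.createMediaStreamSource(stream);
  });
}
```

The returned promise resolves to the source node, which can then be connected to other nodes, and rejects if the user denies access or no microphone is available.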

Gapless Looping in Audio Elements

The MediaElementAudioSourceNode similarly allows an Audio Element to be connected through Web Audio. This is useful for playing background music or other long-form audio that the app doesn’t want to keep entirely in memory.

We’ve connected both Audio and Video Elements to Web Audio, and made performance changes that allow audio to loop in both without an audible gap. This allows samples to be looped continuously.
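As an illustration, routing a looping media element into the audio graph might look like the sketch below. The helper name `connectLoopingElement` is an assumption, and whether the loop is actually gapless depends on the browser.

```javascript
// Sketch only: `connectLoopingElement` is a hypothetical helper.
function connectLoopingElement(audioContext, mediaElement) {
  // Standard HTMLMediaElement property: restart playback when the end is reached.
  mediaElement.loop = true;

  // Route the element's audio through the Web Audio graph.
  var source = audioContext.createMediaElementSource(mediaElement);
  source.connect(audioContext.destination);
  return source;
}
```

Once an element is wrapped this way, its audio is heard only through the graph, so the source must reach the destination node (directly or through other nodes) to be audible.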

Web Audio Demo

We’ve published a demo to illustrate some of Web Audio’s capabilities using stream capture with getUserMedia. The Microphone Streaming & Web Audio Demo allows local audio to be recorded and played. Audio is passed through Web Audio nodes that visualize the audio signals, and apply effects and filters.

The following gives a short walkthrough of the demo implementation.

Create the AudioContext

Setting up the audio context and the audio graph is done with some basic JavaScript. Simply create nodes that you need (in this case, source, gain, filter, convolver and analyzer nodes), and connect them from one to the next.

Setting up the audioContext is simple:

var audioContext = new (window.AudioContext || window.webkitAudioContext)();

Connect the Audio Nodes

Additional nodes get created by calling node-specific create methods on audioContext:

var micGain = audioContext.createGain();

var sourceMix = audioContext.createGain();

var visualizerInput = audioContext.createGain();

var outputGain = audioContext.createGain();

var dynComp = audioContext.createDynamicsCompressor();

Connect the Streaming Source

The streaming source is just as simple to create:

sourceMic = audioContext.createMediaStreamSource(stream);

Nodes are connected from source to processing nodes to the destination node with simple connect calls:

sourceMic.connect(notchFilter);

notchFilter.connect(micGain);

micGain.connect(sourceMix);

sourceAudio.connect(sourceMix);
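The excerpt above uses a notchFilter without showing how it is created, and stops at sourceMix. The sketch below shows one plausible way to fill in those pieces. The filter settings and the order of the output stage are assumptions, not the demo's actual code, and both helper names are hypothetical.

```javascript
// Hypothetical helper: the demo's real notch filter settings are not shown,
// so centerHz and q are illustrative parameters.
function createNotchFilter(audioContext, centerHz, q) {
  var filter = audioContext.createBiquadFilter();
  filter.type = 'notch';             // attenuate a narrow band around centerHz
  filter.frequency.value = centerHz; // center of the notch, in Hz
  filter.Q.value = q;                // higher Q gives a narrower notch
  return filter;
}

// Assumed output stage: mix -> compressor -> output gain -> speakers.
function connectOutputStage(audioContext, sourceMix, dynComp, outputGain) {
  sourceMix.connect(dynComp);
  dynComp.connect(outputGain);
  outputGain.connect(audioContext.destination);
}
```

For instance, `createNotchFilter(audioContext, 60, 10)` would target mains hum at 60 Hz, a value picked here purely as an example.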

Mute and Unmute

The mic and speakers have mute controls to manage situations where audio feedback happens. They are implemented by toggling the gain on nodes at the stream source and just before the AudioDestinationNode:

var toggleGainState = function(elementId, elementClass, outputElement){

var ele = document.getElementById(elementId);

return function(){

if (outputElement.gain.value === 0) {

outputElement.gain.value = 1;

ele.classList.remove(elementClass);

} else {

outputElement.gain.value = 0;

ele.classList.add(elementClass);

}

};

};

var toggleSpeakerMute = toggleGainState('speakerMute', 'button--selected', outputGain);

var toggleMicMute = toggleGainState('micMute', 'button--selected', micGain);

Apply Room Effects

Room effects are applied by loading impulse response files into a ConvolverNode connected in the stream path:

var effects = {

none: {

file: 'sounds/impulse-response/trigroom.wav'

},

telephone: {

file: 'sounds/impulse-response/telephone.wav'

},

garage: {

file: 'sounds/impulse-response/parkinggarage.wav'

},

muffler: {

file: 'sounds/impulse-response/muffler.wav'

}

};

var applyEffect = function() {

var effectName = document.getElementById('effectmic-controls').value;

var selectedEffect = effects[effectName];

var effectFile = selectedEffect.file;

Note that we took a small liberty in using the “trigroom” environment as a surrogate for no environmental effect being applied. No offense is intended for fans of trigonometry!
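The excerpt stops before the impulse response is actually loaded. One way that step could be written is sketched below; it is not the demo's code. It assumes a fetch-style function is passed in as `fetchFn` (for example `window.fetch.bind(window)`, or an XMLHttpRequest wrapper where fetch is unavailable), and the name `loadImpulseResponse` is hypothetical.

```javascript
// Sketch only: `loadImpulseResponse` and `fetchFn` are hypothetical names.
function loadImpulseResponse(audioContext, convolver, url, fetchFn) {
  return fetchFn(url)
    .then(function (response) {
      if (!response.ok) {
        throw new Error('Could not load impulse response: ' + url);
      }
      return response.arrayBuffer(); // raw bytes of the .wav file
    })
    .then(function (data) {
      // Use the callback form of decodeAudioData and wrap it in a promise.
      return new Promise(function (resolve, reject) {
        audioContext.decodeAudioData(data, resolve, reject);
      });
    })
    .then(function (audioBuffer) {
      // Assigning the decoded buffer is what changes the room effect.
      convolver.buffer = audioBuffer;
      return audioBuffer;
    });
}
```

With the effects table above, a call could look like `loadImpulseResponse(audioContext, convolver, effectFile, window.fetch.bind(window))`, where `convolver` is assumed to be a ConvolverNode already connected in the stream path.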

Visualize the Audio Signal

Visualizations were implemented by configuring AnalyserNodes for time and frequency domain data, and using the results to manipulate canvas-based presentations.

var drawTime = function() {

requestAnimationFrame(drawTime);

timeAnalyser.getByteTimeDomainData(timeDataArray);

var drawFreq = function() {

requestAnimationFrame(drawFreq);

freqAnalyser.getByteFrequencyData(freqDataArray);
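The two excerpts above end right after the analyser data is read. As a sketch of what could follow in the time domain case, the helpers below turn the byte samples into canvas coordinates and stroke them as a waveform. Both function names are hypothetical and the drawing style is an assumption, not the demo's rendering code.

```javascript
// Sketch only: `timeDataToPoints` and `drawWaveform` are hypothetical helpers.
// getByteTimeDomainData fills a Uint8Array with values 0..255 (128 is silence).
function timeDataToPoints(timeData, width, height) {
  var points = [];
  var step = width / timeData.length; // horizontal spacing between samples
  for (var i = 0; i < timeData.length; i++) {
    // Flip vertically so larger sample values appear higher on the canvas.
    var y = (1 - timeData[i] / 255) * height;
    points.push({ x: i * step, y: y });
  }
  return points;
}

// Strokes the points as one connected line on a 2D canvas context.
function drawWaveform(canvasCtx, points, width, height) {
  canvasCtx.clearRect(0, 0, width, height);
  canvasCtx.beginPath();
  points.forEach(function (p, i) {
    if (i === 0) {
      canvasCtx.moveTo(p.x, p.y);
    } else {
      canvasCtx.lineTo(p.x, p.y);
    }
  });
  canvasCtx.stroke();
}
```

Inside a loop like drawTime, the points could be computed from timeDataArray right after the getByteTimeDomainData call and passed to `drawWaveform` with the canvas's 2D context and dimensions.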

Record & Play

Recorder features use the recorder.js open source sample written by Matt Diamond, which has been used previously in other Web Audio based recorder demos. Live audio in the demo uses the MediaStreamAudioSourceNode, but recorded audio is played using the MediaElementAudioSourceNode. Gapless looping can be tested by activating the loop control during playback.

The complete code for the demo is available for your evaluation and use on GitHub.

Conclusion

There are many articles and other demos available on the web that illustrate Web Audio’s many capabilities, and provide other examples that can be run on Microsoft Edge. You can try some out on our Test Drive page at Microsoft Edge Dev:

- Flight Arcade: WebGL graphics and Web Audio sound

- Web Audio Tuner: Chromatic tuner using Web Audio

- Music Lounge: More WebGL with Web Audio

We’re eager for your feedback so we can further improve our Web Audio implementation, and meanwhile we are looking forward to seeing what you do with these new features!

– Jerry Smith, Senior Program Manager, Microsoft Edge