Ensuring high-quality browser accessibility with automation

Testing a browser’s accessibility implementation on Windows used to mean downloading the tools for the browser you wanted to test and then manually checking each requirement of a test suite. To reduce this overhead, and to catch regressions before they ship, we created a tool that automates the HTML 5 Accessibility test suite.

What is necessary to automate accessibility

To fully test accessibility, you need to test not only that the platform code works as expected, but also the bridge between the UIA client and the UIA provider. To accomplish this, we built a custom UIA client that iterates over the HTML 5 Accessibility tests, using WebDriver to automate the browser and requesting nodes from the accessibility tree to verify the results.

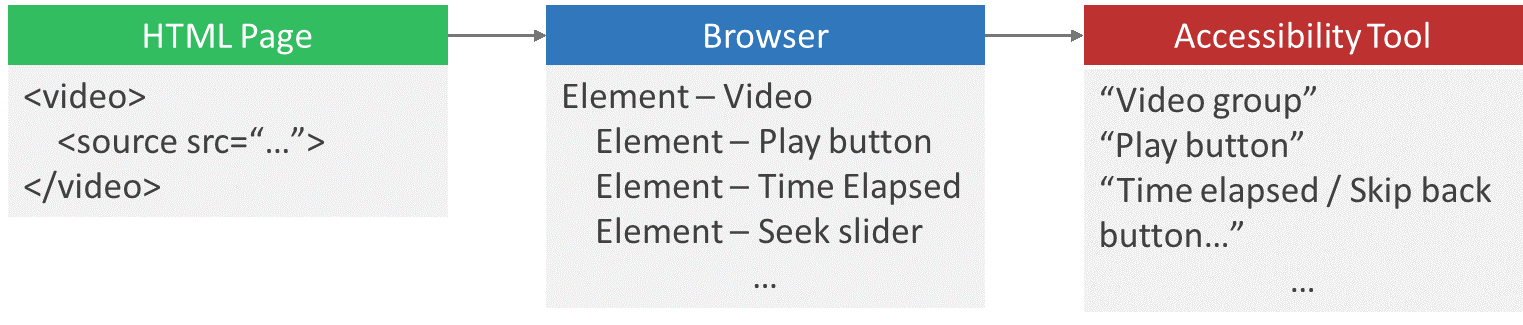

The figure above shows the accessibility pipeline from the page source to the user. Microsoft Edge, or any other browser, converts an HTML element such as the video element into several UI Automation elements. The video element becomes the parent of the other elements, which represent its controls, such as the play button or the volume slider. An assistive technology such as Narrator then conveys those elements in a way the user can understand, for example by speaking their names aloud. Our automation took the place of the assistive technology to verify that the rest of the pipeline was working correctly.
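The pipeline can be illustrated with a toy Python model. This is a minimal sketch, not the harness’s code: the `UiaElement` class, the `"Group"` control type used for the video parent, and the announcement format are all hypothetical, and nothing here calls the real UI Automation API. The `announce` function plays the role the automation took over from the assistive technology: it walks the elements a browser exposes and produces what a user would hear, so a test can assert on that output.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class UiaElement:
    """Hypothetical stand-in for a UI Automation element (not the real API)."""
    name: str
    control_type: str
    children: list[UiaElement] = field(default_factory=list)


def expose_video(label: str) -> UiaElement:
    """Mimic a browser exposing one <video> element as several elements:
    a parent for the video plus children for its built-in controls."""
    return UiaElement(label, "Group", [
        UiaElement("Play", "Button"),
        UiaElement("Volume", "Slider"),
    ])


def announce(element: UiaElement) -> list[str]:
    """Stand-in for an assistive technology: render each element, depth first,
    as the text a screen reader might speak (format is hypothetical)."""
    spoken = [f"{element.name}, {element.control_type}"]
    for child in element.children:
        spoken.extend(announce(child))
    return spoken


# The automation checks what a user would hear instead of listening to Narrator.
spoken = announce(expose_video("Intro video"))
for line in spoken:
    print(line)
```

Running it prints one line per element, starting with the parent: `Intro video, Group`, then `Play, Button`, then `Volume, Slider`.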

Automating the HTML 5 Accessibility tests

Automating these tests requires two types of access to the page. First, we need to be able to simulate user input, such as key presses, which we do through WebDriver. Second, we need to verify that the content of the page is accessible to users of all abilities, which we do through accessibility APIs, such as UI Automation.

It’s important to understand that the DOM tree and the accessibility tree have two different sets of elements, which don’t always have a one-to-one relationship. For example, the video element might not have any children in the DOM tree, but will have several children in the accessibility tree.
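One way to see why tests must query the accessibility tree directly, rather than infer expectations from the DOM, is to count the children of the same element in both trees. The sketch below uses hand-built, hypothetical trees for a page with one video element; the `Node` class and `find` helper are illustrative only and do not come from the harness.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """Minimal tree node, used here for both the DOM and the accessibility tree."""
    name: str
    children: list[Node] = field(default_factory=list)


def find(root: Node, name: str) -> Node | None:
    """Depth-first search for the first node with the given name."""
    if root.name == name:
        return root
    for child in root.children:
        hit = find(child, name)
        if hit is not None:
            return hit
    return None


# Hypothetical trees for a page containing a single <video> element.
dom = Node("body", [Node("video")])
a11y = Node("document", [Node("video", [Node("Play"), Node("Volume")])])

dom_video = find(dom, "video")
a11y_video = find(a11y, "video")
assert dom_video is not None and a11y_video is not None
print(f"DOM children: {len(dom_video.children)}")             # 0
print(f"Accessibility children: {len(a11y_video.children)}")  # 2
```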

First, we walk the accessibility tree to ensure that the element under test can be found on the page and that it exposes the right control type. Next, we use WebDriver to tab through all the keyboard-accessible elements. This matters because the web is often a difficult place for keyboard-only users, and we want them to be able to navigate every site and reach every element.
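The two steps can be sketched in Python against a fake page. This is a minimal sketch under stated assumptions: `Element`, `check_control_type`, and `tab_cycle` are hypothetical names, the accessibility tree is hand-built rather than read through UI Automation, and the Tab key is simulated by a callback instead of WebDriver. In a real run that callback would send a Tab key press through WebDriver (Selenium’s Python bindings, for example, provide `Keys.TAB` and `driver.switch_to.active_element`) and report which element now has focus.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Iterator


@dataclass
class Element:
    """Hypothetical accessibility-tree node (not the real UIA API)."""
    name: str
    control_type: str
    focusable: bool = False
    children: list[Element] = field(default_factory=list)


def walk(root: Element) -> Iterator[Element]:
    """Pre-order walk of the accessibility tree."""
    yield root
    for child in root.children:
        yield from walk(child)


def check_control_type(root: Element, name: str, expected: str) -> bool:
    """Step 1: the element exists and exposes the expected control type."""
    return any(e.name == name and e.control_type == expected for e in walk(root))


def tab_cycle(press_tab: Callable[[], str], start: str, limit: int = 100) -> list[str]:
    """Step 2: press Tab until focus returns to `start`, recording the name
    of each element that receives focus along the way."""
    visited: list[str] = []
    for _ in range(limit):
        focused = press_tab()
        if focused == start:
            return visited
        visited.append(focused)
    raise RuntimeError("focus never returned to the start; possible keyboard trap")


page = Element("document", "Document", children=[
    Element("Search", "Edit", focusable=True),
    Element("Intro video", "Group", children=[
        Element("Play", "Button", focusable=True),
        Element("Volume", "Slider", focusable=True),
    ]),
])
expected = [e.name for e in walk(page) if e.focusable]

# Fake Tab key standing in for WebDriver: moves focus through the focusable
# elements in tree order, then back to the document.
order = iter(expected + ["document"])
reached = tab_cycle(lambda: next(order), start="document")

print(check_control_type(page, "Play", "Button"))  # True
print(reached)  # ['Search', 'Play', 'Volume']
```

The fake assumes tab order matches tree order, which real pages can change with `tabindex`, so a real test would compare against an explicitly listed expected order. The `limit` guard turns a keyboard trap, where focus never cycles back, into a test failure instead of an endless loop.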

Accessibility on Windows goes beyond Microsoft Edge

The work we’ve done has helped us improve accessibility in Microsoft Edge, but we want all users on Windows to have a remarkable accessibility experience, no matter what browser they’re using. To accomplish this, web developers need to ensure their sites are accessible, and other browser vendors need to ensure their browsers meet accessibility requirements. To help the community in this endeavor, we are open sourcing our HTML 5 Accessibility test harness code on GitHub under an MIT license.

As part of this project, we’ve included a sample branch to help web developers start automating accessibility testing of their own sites in browsers that support UI Automation. To use it on your site, specify the URLs you want to test and what should be tested on each one. You can learn more about testing your own site in the site automation README.
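The idea of listing URLs together with per-page expectations can be sketched as a small test plan. The format below is hypothetical and is not the sample branch’s actual configuration (its README describes that); `Check`, `PLAN`, `run_plan`, and the example.com URLs are illustrative, and `get_tree` stands in for loading a page and reading its accessibility tree.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Check:
    """One expectation: the element with this name exposes this control type."""
    name: str
    control_type: str


# Hypothetical test plan: the URLs to test and what to check on each one.
PLAN: dict[str, list[Check]] = {
    "https://example.com/": [Check("Search", "Edit")],
    "https://example.com/media": [Check("Play", "Button"), Check("Volume", "Slider")],
}


def run_plan(
    plan: dict[str, list[Check]],
    get_tree: Callable[[str], dict[str, str]],
) -> list[str]:
    """Run every check and return human-readable failures.

    get_tree(url) is a stand-in that returns {element name: control type}
    for the page's accessibility tree.
    """
    failures: list[str] = []
    for url, checks in plan.items():
        tree = get_tree(url)
        for check in checks:
            actual = tree.get(check.name)  # None if the element is missing
            if actual != check.control_type:
                failures.append(
                    f"{url}: {check.name!r} expected {check.control_type}, got {actual}"
                )
    return failures


# Fake accessibility trees: the media page exposes Volume with the wrong type.
fake_trees = {
    "https://example.com/": {"Search": "Edit"},
    "https://example.com/media": {"Play": "Button", "Volume": "Group"},
}
failures = run_plan(PLAN, fake_trees.__getitem__)
for failure in failures:
    print(failure)
```

Keeping the plan as data separate from the runner means adding a page or an expectation does not require touching the checking logic.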

We hope this can be useful to help make your products work for everyone, and we look forward to feedback and contributions from the community!

- David Brett, Software Engineer

- Greg Whitworth, Program Manager