In this article, we’re going to dig further into some of the options for speech recognition with SpeechRecognizer. First, however, we’re going to take a detour and look at speech synthesis via the recognizer’s friend, the SpeechSynthesizer.

Speech Synthesis in ‘a few lines of code’

Let’s start by writing a function that performs synthesis (i.e. takes text and outputs speech):

[code language="csharp"]
async Task<IRandomAccessStream> SynthesizeTextToSpeechAsync(string text)
{
    // Windows.Storage.Streams.IRandomAccessStream
    IRandomAccessStream stream = null;

    // Windows.Media.SpeechSynthesis.SpeechSynthesizer
    using (SpeechSynthesizer synthesizer = new SpeechSynthesizer())
    {
        // Windows.Media.SpeechSynthesis.SpeechSynthesisStream
        stream = await synthesizer.SynthesizeTextToStreamAsync(text);
    }
    return stream;
}
[/code]

This is short and sweet, but it’s not quite the inverse of the SpeechRecognizer functionality that we saw in the previous article, which actively listened to a microphone for speech. The SpeechSynthesizer does not itself play audio through a speaker or headphones.

If we call this function with a string like “Hello World,” we get back an audio stream that we then need to play through something like a XAML MediaElement. The method below builds on the previous one to add that extra piece of functionality:

[code language="csharp"]
async Task SpeakTextAsync(string text, MediaElement mediaElement)
{
    IRandomAccessStream stream = await this.SynthesizeTextToSpeechAsync(text);

    await mediaElement.PlayStreamAsync(stream, true);
}
[/code]

If we have a MediaElement defined in a XAML UI and named ‘uiMediaElement’ then we could call this new method with a snippet like this:

[code language="csharp"]
async void Button_Click(object sender, RoutedEventArgs e)
{
    await this.SpeakTextAsync("Hello World", this.uiMediaElement);
}
[/code]

Sharp-eyed readers will notice that MediaElement has no PlayStreamAsync method; we added one as an extension method to tidy up the code. Here’s a possible implementation of that extension method:

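[code language="csharp"]
using System;
using System.Threading.Tasks;
using Windows.Storage.Streams;
using Windows.UI.Xaml;
using Windows.UI.Xaml.Controls;

static class MediaElementExtensions
{
    public static async Task PlayStreamAsync(
        this MediaElement mediaElement,
        IRandomAccessStream stream,
        bool disposeStream = true)
    {
        // bool is irrelevant here, just using this to flag task completion.
        TaskCompletionSource<bool> taskCompleted = new TaskCompletionSource<bool>();

        // Note that the MediaElement needs to be in the UI tree for events
        // like MediaEnded to fire.
        RoutedEventHandler endOfPlayHandler = (s, e) =>
        {
            taskCompleted.TrySetResult(true);
        };

        // Without this, a stream that fails to play would leave the task
        // (and the caller's await) pending forever.
        ExceptionRoutedEventHandler failedHandler = (s, e) =>
        {
            taskCompleted.TrySetException(new Exception(e.ErrorMessage));
        };

        mediaElement.MediaEnded += endOfPlayHandler;
        mediaElement.MediaFailed += failedHandler;
        try
        {
            mediaElement.SetSource(stream, string.Empty);
            mediaElement.Play();
            await taskCompleted.Task;
        }
        finally
        {
            // Unhook the handlers and release the stream whether playback
            // ended normally or failed.
            mediaElement.MediaEnded -= endOfPlayHandler;
            mediaElement.MediaFailed -= failedHandler;
            if (disposeStream)
            {
                stream.Dispose();
            }
        }
    }
}
[/code]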
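The MediaElement is only one possible destination for the stream that SynthesizeTextToSpeechAsync returns. As a sketch, assuming the synthesizer's default WAV output and using a hypothetical SaveSpeechToFileAsync method name, the same stream could instead be copied into a file in the app's local folder:

[code language="csharp"]
// Windows.Storage (ApplicationData, StorageFile, CreationCollisionOption, FileAccessMode)
// Windows.Storage.Streams (IRandomAccessStream, RandomAccessStream)

// Hypothetical helper: writes synthesized speech to a file rather than playing it.
async Task SaveSpeechToFileAsync(string text, string fileName)
{
    StorageFile file = await ApplicationData.Current.LocalFolder.CreateFileAsync(
        fileName, CreationCollisionOption.ReplaceExisting);

    // Both streams are disposed once the copy has completed.
    using (IRandomAccessStream speech = await this.SynthesizeTextToSpeechAsync(text))
    using (IRandomAccessStream output = await file.OpenAsync(FileAccessMode.ReadWrite))
    {
        await RandomAccessStream.CopyAsync(speech, output);
        await output.FlushAsync();
    }
}
[/code]

Calling `await this.SaveSpeechToFileAsync("Hello World", "hello.wav");` would leave the audio in the app's local storage, where it could be played later without synthesizing it again.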

Now we’re talking (literally!), and we can vary the output by manipulating properties on the MediaElement such as PlaybackRate. But there are other, and perhaps better, ways of changing the voice itself.

Where is the voice coming from?

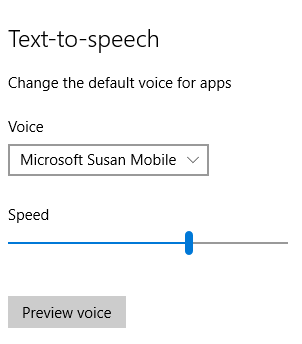

The voice that’s being used for synthesis by the previous code snippet will be the default one set in the control panel for the system. For example, on a PC the setting looks like this:

This choice of voice is reflected in the SpeechSynthesizer.DefaultVoice property but can be changed by making use of the SpeechSynthesizer.AllVoices property to find and use other voices, as in the snippet below:

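[code language="csharp"]
// Same shape as SynthesizeTextToSpeechAsync above (the method name here is
// just illustrative), but choosing the voice first. Needs System.Linq.
async Task<IRandomAccessStream> SynthesizeTextWithFemaleVoiceAsync(string text)
{
    // Windows.Storage.Streams.IRandomAccessStream
    IRandomAccessStream stream = null;

    // Windows.Media.SpeechSynthesis.SpeechSynthesizer
    using (SpeechSynthesizer synthesizer = new SpeechSynthesizer())
    {
        // Our previous example effectively used SpeechSynthesizer.DefaultVoice.
        // Here we choose the first female voice on the system or we fall back
        // to the default voice again.
        VoiceInformation voiceInfo =
        (
            from voice in SpeechSynthesizer.AllVoices
            where voice.Gender == VoiceGender.Female
            select voice
        ).FirstOrDefault() ?? SpeechSynthesizer.DefaultVoice;

        synthesizer.Voice = voiceInfo;

        // Windows.Media.SpeechSynthesis.SpeechSynthesisStream
        stream = await synthesizer.SynthesizeTextToStreamAsync(text);
    }
    return stream;
}
[/code]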

This code changes the voice used for synthesis to the first female voice that it finds on the system, and your own app can similarly offer the user a choice for which voice they’d like to hear.
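To offer that choice, an app needs to list the installed voices and then map the user's selection back to one of them. The sketch below does both; VoiceCatalog, DescribeVoices, and VoiceAt are hypothetical names, and the display format is just one option built from the DisplayName, Language, and Gender properties of VoiceInformation:

[code language="csharp"]
using System.Collections.Generic;
using System.Linq;
using Windows.Media.SpeechSynthesis;

static class VoiceCatalog // hypothetical helper
{
    // One display string per installed voice, in the same order as AllVoices,
    // so a selected index can be mapped straight back to a voice.
    public static List<string> DescribeVoices()
    {
        return SpeechSynthesizer.AllVoices
            .Select(v => $"{v.DisplayName} ({v.Language}, {v.Gender})")
            .ToList();
    }

    // Maps a selected index back to its voice, falling back to the default
    // voice if the index is out of range (e.g. -1 when nothing is selected).
    public static VoiceInformation VoiceAt(int index)
    {
        IReadOnlyList<VoiceInformation> voices = SpeechSynthesizer.AllVoices;
        return (index >= 0 && index < voices.Count)
            ? voices[index]
            : SpeechSynthesizer.DefaultVoice;
    }
}
[/code]

A ComboBox could be filled from DescribeVoices() through its ItemsSource, and its SelectedIndex passed to VoiceAt() to set synthesizer.Voice before synthesis.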

Taking more control of speech delivery

There’s more that an app can do to control how speech is delivered to the user via the SpeechSynthesizer.SynthesizeSsmlToStreamAsync method.

SSML (Speech Synthesis Markup Language) is an XML-based language that can be used to control many aspects of speech generation, including volume, pronunciation, and pitch. The complete specification is on the W3C site. It’s fairly involved and perhaps better suited to specialized scenarios, but it’s easy to write a method, similar to our earlier one, that synthesizes SSML read from a file:

[code language="csharp"]
async Task<IRandomAccessStream> SynthesizeSsmlToSpeechAsync(StorageFile ssmlFile)
{
    // Windows.Storage.Streams.IRandomAccessStream
    IRandomAccessStream stream = null;

    // Windows.Media.SpeechSynthesis.SpeechSynthesizer
    using (SpeechSynthesizer synthesizer = new SpeechSynthesizer())
    {
        // Windows.Storage.FileIO
        string text = await FileIO.ReadTextAsync(ssmlFile);

        // Windows.Media.SpeechSynthesis.SpeechSynthesisStream
        stream = await synthesizer.SynthesizeSsmlToStreamAsync(text);
    }
    return stream;
}
[/code]

We can then layer a second method that plays the speech through a MediaElement, as we did for the plain text synthesis example:

[code language="csharp"]
async Task SpeakSsmlFileAsync(StorageFile ssmlFile, MediaElement mediaElement)
{
    IRandomAccessStream stream = await this.SynthesizeSsmlToSpeechAsync(ssmlFile);

    await mediaElement.PlayStreamAsync(stream, true);
}
[/code]

If we add a file named speech.xml to our project (with its Build Action set to Content, so that it can be loaded through an ms-appx:/// URI) and have a MediaElement named uiMediaElement in our UI, then we can invoke this function:

[code language="csharp"]
async void Button_Click(object sender, RoutedEventArgs e)
{
    StorageFile ssmlFile = await StorageFile.GetFileFromApplicationUriAsync(
        new Uri("ms-appx:///speech.xml"));

    await this.SpeakSsmlFileAsync(
        ssmlFile, this.uiMediaElement);
}
[/code]

Taking the opening lines of ‘Macbeth’ as our example text, below is a simple piece of SSML that marks them up:

[code language="xml"]
<?xml version="1.0" encoding="utf-8"?>
<speak
  version="1.0"
  xmlns="http://www.w3.org/2001/10/synthesis"
  xml:lang="en-US">
  When shall we three meet again in thunder, lightning, or in rain?
  When the hurlyburly’s done
  When the battle’s lost and won
  That will be ere set of sun
  Where the place?
  Upon the heath
  There to meet with Macbeth
</speak>
[/code]

But we can drive quite different output from the synthesizer if we take some control in our SSML and add some pauses, emphasis, and speed settings:

[code language="xml"]
<speak
  version="1.0"
  xmlns="http://www.w3.org/2001/10/synthesis"
  xml:lang="en-US">
  <p>
    <s>
      When shall we three meet again <break/> <prosody rate="slow">
        in <emphasis level="moderate">thunder,</emphasis><break time="200ms"/>lightning,<emphasis level="reduced">
          <break time="200ms"/>or in rain?
        </emphasis>
      </prosody>
    </s>
    <s>
      When the <emphasis level="strong">hurlyburly’s</emphasis> done
    </s>
    <s>
      <break time="500ms"/>When the battle’s <emphasis level="moderate">lost</emphasis> and won
    </s>
    <s>
      <break time="500ms"/>That will be ere <break time="200ms"/>set of sun
    </s>
  </p>
  <p>
    <s>
      <break time="500ms"/>Where the place?<break time="250ms"/>
    </s>
    <s>
      <emphasis level="reduced">Upon the heath<break time="1s"/></emphasis>
    </s>
    <s>
      There to meet <break time="500ms"/>with <emphasis level="strong">Macbeth</emphasis>
    </s>
  </p>
</speak>
[/code]
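SSML doesn't have to come from a file. As a sketch, using a hypothetical SynthesizeInlineSsmlAsync method, the markup can be held in a string and passed straight to SynthesizeSsmlToStreamAsync. The volume, pitch, and rate values below are among the named values that the SSML 1.0 specification defines for the prosody element:

[code language="csharp"]
// Windows.Media.SpeechSynthesis, Windows.Storage.Streams

// Hypothetical helper: synthesizes SSML held in a string rather than a file.
async Task<IRandomAccessStream> SynthesizeInlineSsmlAsync()
{
    // Single-quoted attributes avoid escaping quotes in the verbatim string.
    string ssml =
        @"<speak version='1.0'
                 xmlns='http://www.w3.org/2001/10/synthesis'
                 xml:lang='en-US'>
            <prosody volume='loud' pitch='high'>Good morning!</prosody>
            <prosody volume='soft' pitch='low' rate='slow'>Time to get up.</prosody>
          </speak>";

    using (SpeechSynthesizer synthesizer = new SpeechSynthesizer())
    {
        // The synthesizer is disposed only after the await has completed.
        return await synthesizer.SynthesizeSsmlToStreamAsync(ssml);
    }
}
[/code]

The resulting stream can be played with the same PlayStreamAsync extension method used earlier.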

Taking more control of recognition

Continuing on this theme of ‘taking control,’ let’s now return to the SpeechRecognizer that was the subject of the previous article and see how we can apply a little more control there.

As we saw previously, the recognizer always constrains the speech that it listens for, using the constraints held in its SpeechRecognizer.Constraints collection. These can be of several different types, which we’ll work through in the following sections.

Recognition with dictionaries and hints

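If you do not explicitly add constraints to your SpeechRecognizer, it defaults to the predefined dictation grammar. You can also add this kind of constraint yourself as a SpeechRecognitionTopicConstraint, choosing one of the SpeechRecognitionScenario options:

- Web Search (SpeechRecognitionScenario.WebSearch)

- Dictation (SpeechRecognitionScenario.Dictation)

- Form Filling (SpeechRecognitionScenario.FormFilling)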

You can tailor it further by passing a topic hint string to the constraint’s constructor.

It’s important to note that (1) constraining speech this way requires the user to have opted in to the ‘Get to know me’ privacy setting and (2) recognition is performed by a remote web service, which has potential implications for privacy, performance, and connectivity.

As an example, if you wanted to ask your user for a telephone number, then that’s easily done:

[code language="csharp"]
string phoneNumber = string.Empty;

using (SpeechRecognizer recognizer = new SpeechRecognizer())
{
    recognizer.Constraints.Add(
        new SpeechRecognitionTopicConstraint(
            SpeechRecognitionScenario.FormFilling, "Phone"));

    await recognizer.CompileConstraintsAsync();

    SpeechRecognitionResult result = await recognizer.RecognizeAsync();

    if (result.Status == SpeechRecognitionResultStatus.Success)
    {
        phoneNumber = result.Text;
    }
}
[/code]

This snippet did a very decent job of recognizing my own phone numbers whether spoken with or without country codes.
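When the recognizer is less sure, it can help to show the user a few candidates rather than a single answer. As a sketch using a hypothetical GetPhoneNumberCandidates helper, SpeechRecognitionResult.Confidence can screen out rejected results, and GetAlternates can supply up to three alternatives, ordered from most to least likely:

[code language="csharp"]
// System.Collections.Generic, Windows.Media.SpeechRecognition

// Hypothetical helper: up to three candidate texts for the user to confirm.
static List<string> GetPhoneNumberCandidates(SpeechRecognitionResult result)
{
    List<string> candidates = new List<string>();

    // Nothing worth offering if recognition failed or was rejected.
    if (result.Status != SpeechRecognitionResultStatus.Success ||
        result.Confidence == SpeechRecognitionConfidence.Rejected)
    {
        return candidates;
    }

    foreach (SpeechRecognitionResult alternate in result.GetAlternates(3))
    {
        candidates.Add(alternate.Text);
    }
    return candidates;
}
[/code]

The result from the phone-number snippet above could be passed in directly, and the returned list bound to a picker for confirmation.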

Recognizing lists of words or commands

Another area where the recognizer works particularly well is when it is guided to listen only for a specific list of words or commands. We can replace the SpeechRecognitionTopicConstraint in the previous snippet with a SpeechRecognitionListConstraint, as in the snippet below:

[code language="csharp"]
recognizer.Constraints.Add(
    new SpeechRecognitionListConstraint(
        new string[] { "left", "right", "up", "down" }));
[/code]

When we do so, the recognizer becomes very good at picking up the words in question, and we can combine multiple lists:

[code language="csharp"]
recognizer.Constraints.Add(
    new SpeechRecognitionListConstraint(
        new string[] { "left", "right", "up", "down" }, "tag1"));

recognizer.Constraints.Add(
    new SpeechRecognitionListConstraint(
        new string[] { "over", "under", "behind", "in front" }, "tag2"));
[/code]

This might make sense for ‘command’-based scenarios, where voice shortcuts can supplement existing mechanisms of interaction.

Note that in the snippet above, the two constraints have been tagged. After recognition, SpeechRecognitionResult.Constraint identifies the constraint that matched, and its Tag tells us which list the recognized word came from.
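As a sketch of that check, here is a hypothetical ClassifyCommand helper. It assumes the "tag1" and "tag2" constraints from the snippet above and labels each recognized word by the list it came from:

[code language="csharp"]
// Windows.Media.SpeechRecognition

// Hypothetical helper: describes a result by the tag of the constraint that matched.
static string ClassifyCommand(SpeechRecognitionResult result)
{
    if (result.Status != SpeechRecognitionResultStatus.Success)
    {
        return "nothing recognized";
    }

    // result.Constraint is the constraint whose list matched the speech.
    switch (result.Constraint?.Tag)
    {
        case "tag1":
            return "direction: " + result.Text;
        case "tag2":
            return "position: " + result.Text;
        default:
            return "unexpected: " + result.Text;
    }
}
[/code]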

One example of this might be to use “play/pause/next/previous/louder/quieter” commands for a media player, while another might be to control a remote camera with “zoom in/zoom out/shoot” commands.

It’s possible to use this type of technique to implement a state machine whereby your app can drive the user through a sequence of speech interactions, selectively enabling and disabling the recognition of particular words or phrases based on the user’s state.
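Here is a minimal sketch of that idea, with a hypothetical RecognizeDirectionThenPositionAsync method and two hypothetical states. The app first listens only for a direction and then only for a position, toggling each constraint's IsEnabled property between recognitions. It assumes that IsEnabled can be changed after compilation without recompiling; calling CompileConstraintsAsync again after each change would be the cautious alternative:

[code language="csharp"]
// Windows.Media.SpeechRecognition

// Hypothetical two-state interaction: a direction first, then a position.
async Task<string> RecognizeDirectionThenPositionAsync()
{
    using (SpeechRecognizer recognizer = new SpeechRecognizer())
    {
        SpeechRecognitionListConstraint directions = new SpeechRecognitionListConstraint(
            new string[] { "left", "right", "up", "down" }, "direction");
        SpeechRecognitionListConstraint positions = new SpeechRecognitionListConstraint(
            new string[] { "over", "under", "behind", "in front" }, "position");

        recognizer.Constraints.Add(directions);
        recognizer.Constraints.Add(positions);
        await recognizer.CompileConstraintsAsync();

        // State 1: only the direction list can be recognized.
        positions.IsEnabled = false;
        SpeechRecognitionResult first = await recognizer.RecognizeAsync();
        if (first.Status != SpeechRecognitionResultStatus.Success)
        {
            return string.Empty;
        }

        // State 2: swap over so that only the position list is active.
        directions.IsEnabled = false;
        positions.IsEnabled = true;
        SpeechRecognitionResult second = await recognizer.RecognizeAsync();
        if (second.Status != SpeechRecognitionResultStatus.Success)
        {
            return first.Text;
        }
        return first.Text + " " + second.Text;
    }
}
[/code]

Each additional state adds another pair of IsEnabled changes, which is exactly the growth in complexity that the next section addresses.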

This is attractive in that it can be very dynamic, but it can also get complex. This is where a custom grammar can step in and make life easier.

Grammar-based recognition

Grammar-based recognition usually relies on a grammar that is static but which might contain many options. The grammars understood by the SpeechRecognitionGrammarFileConstraint are Speech Recognition Grammar Specification (SRGS) Version 1.0 grammars, as specified by the W3C.

That’s a big specification to plough through. It may be easier to look at some examples.

If we use the snippet below, which opens a grammar.xml file packaged with the application (as Content) and uses it to recognize speech, then what becomes interesting is the content of the grammar file and how the recognition results can be interpreted to replace the “Process the results…” comment:

[code language="csharp"]
using (SpeechRecognizer recognizer = new SpeechRecognizer())
{
    StorageFile grammarFile =
        await StorageFile.GetFileFromApplicationUriAsync(
            new Uri("ms-appx:///grammar.xml"));

    recognizer.Constraints.Add(
        new SpeechRecognitionGrammarFileConstraint(grammarFile));

    await recognizer.CompileConstraintsAsync();

    SpeechRecognitionResult result = await recognizer.RecognizeAsync();

    if (result.Status == SpeechRecognitionResultStatus.Success)
    {
        // Process the results…
    }
}
[/code]
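Because the grammar lives in a separate file, mistakes in it only surface at run time. As a sketch, the value returned by CompileConstraintsAsync can be checked before RecognizeAsync is called; CompileGrammarAsync is a hypothetical name, and returning null on failure is just one way of signalling the problem:

[code language="csharp"]
// System, Windows.Media.SpeechRecognition, Windows.Storage

// Hypothetical helper: returns a recognizer ready to use with grammar.xml,
// or null if the grammar did not compile.
async Task<SpeechRecognizer> CompileGrammarAsync()
{
    StorageFile grammarFile = await StorageFile.GetFileFromApplicationUriAsync(
        new Uri("ms-appx:///grammar.xml"));

    SpeechRecognizer recognizer = new SpeechRecognizer();
    recognizer.Constraints.Add(
        new SpeechRecognitionGrammarFileConstraint(grammarFile));

    SpeechRecognitionCompilationResult compilation =
        await recognizer.CompileConstraintsAsync();

    if (compilation.Status != SpeechRecognitionResultStatus.Success)
    {
        // e.g. GrammarCompilationFailure for an invalid SRGS document.
        System.Diagnostics.Debug.WriteLine($"Grammar failed: {compilation.Status}");
        recognizer.Dispose();
        return null;
    }

    // The caller owns the recognizer and should dispose of it when finished.
    return recognizer;
}
[/code]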

Let’s look at a couple of examples around a scenario of ordering some snacks.

Example 1: A simple choice

The following simple grammar file sets its root to the rule with the id “foodItem,” which defines a choice between pizza and burger:

[code language="xml"]
<grammar
  version="1.0"
  mode="voice"
  xml:lang="en-US"
  tag-format="semantics/1.0"
  xmlns="http://www.w3.org/2001/06/grammar"
  root="foodItem">

  <rule id="foodItem">
    <one-of>
      <item>
        <tag>out.foodType="pizza";</tag>
        pizza
      </item>
      <item>
        <tag>out.foodType="burger";</tag>
        burger
      </item>
    </one-of>
  </rule>
</grammar>
[/code]

The following snippet can then be used to inspect the SpeechRecognitionResult.SemanticInterpretation properties to interpret the results, and the SpeechRecognitionResult.RulePath can identify which rule steps have been followed, although there is only one in this example:

[code language="csharp"]
SpeechRecognitionResult result = await recognizer.RecognizeAsync();

if (result.Status == SpeechRecognitionResultStatus.Success)
{
    // This will be "foodItem", the only rule involved in parsing here
    string ruleId = result.RulePath[0];

    // This will be "pizza" or "burger" (Single() needs System.Linq)
    string foodType = result.SemanticInterpretation.Properties["foodType"].Single();
}
[/code]

Example 2: Expanding the options

Let’s expand out this grammar to include more than just a single word and make it into a more realistic example of ordering food and a drink:

[code language="xml"]
<?xml version="1.0" encoding="utf-8" ?>
<grammar
  version="1.0"
  mode="voice"
  xml:lang="en-US"
  tag-format="semantics/1.0"
  xmlns="http://www.w3.org/2001/06/grammar"
  root="order">

  <rule id="order">
    <item repeat="0-1">Please</item>
    <item repeat="0-1">Can</item>
    <item>I</item>
    <item repeat="0-1">
      <one-of>
        <item>would like to</item>
        <item>want to</item>
        <item>need to</item>
      </one-of>
    </item>
    <item repeat="0-1">
      <one-of>
        <item>order</item>
        <item>get</item>
        <item>buy</item>
      </one-of>
    </item>
    <item repeat="0-1">a</item>
    <ruleref uri="#foodItem"/>
    <tag>out.foodType=rules.latest();</tag>
    <ruleref uri="#drinkItem"/>
    <tag>out.drinkType=rules.latest();</tag>
    <item repeat="0-1">please</item>
  </rule>

  <rule id="drinkItem">
    <item repeat="0-1">
      <one-of>
        <item>and</item>
        <item>with</item>
      </one-of>
      a
      <ruleref uri="#drinkChoice"/>
      <tag>out=rules.latest();</tag>
    </item>
  </rule>

  <rule id="drinkChoice">
    <one-of>
      <item>cola</item>
      <item>lemonade</item>
      <item>orange juice</item>
    </one-of>
  </rule>

  <rule id="foodItem">
    <one-of>
      <item>
        <tag>out="pizza";</tag>
        <one-of>
          <item>pizza</item>
          <item>pie</item>
          <item>slice</item>
        </one-of>
      </item>
      <item>
        <tag>out="burger";</tag>
        <one-of>
          <item>burger</item>
          <item>hamburger</item>
          <item>beef burger</item>
          <item>quarter pounder</item>
          <item>cheeseburger</item>
        </one-of>
      </item>
    </one-of>
  </rule>
</grammar>
[/code]

This grammar has a root rule called “order” which references other rules named “foodItem,” “drinkItem,” and “drinkChoice,” which themselves present various items that are either required or optional and which break down into a number of choices. It is meant to allow for phrases such as the following:

- “I would like to order a hamburger please”

- “I need to buy a pie”

- “I want to get a cheeseburger and a cola”

- “Please can I buy a pizza and a lemonade”

It allows for quite a few other combinations, as well, but the process of parsing the semantic intention is still very simple and can be covered by this snippet of code for processing the results:

[code language="csharp"]
SpeechRecognitionResult result = await recognizer.RecognizeAsync();

if (result.Status == SpeechRecognitionResultStatus.Success)
{
    IReadOnlyDictionary<string, IReadOnlyList<string>> properties =
        result.SemanticInterpretation.Properties;

    // We could also examine the RulePath property to see which rules have
    // fired.
    if (properties.ContainsKey("foodType"))
    {
        // this will be "burger" or "pizza"
        string foodType = properties["foodType"].First();
    }

    if (properties.ContainsKey("drinkType"))
    {
        // this will be "cola", "lemonade" or "orange juice"
        string drinkType = properties["drinkType"].First();
    }
}
[/code]
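As more optional items are added to a grammar, the ContainsKey checks above start to repeat themselves. A small helper, hypothetically named SemanticHelper.GetFirstOrDefault, can fold the lookup and a fallback value together. As a precaution, it also treats an empty value as missing, in case an optional rule that wasn't spoken still produces a property:

[code language="csharp"]
using System.Collections.Generic;

static class SemanticHelper // hypothetical helper
{
    // Returns the first value for key, or fallback when the property is
    // missing, has no values, or its first value is empty.
    public static string GetFirstOrDefault(
        IReadOnlyDictionary<string, IReadOnlyList<string>> properties,
        string key,
        string fallback = null)
    {
        IReadOnlyList<string> values;
        if (properties.TryGetValue(key, out values) &&
            values.Count > 0 &&
            !string.IsNullOrEmpty(values[0]))
        {
            return values[0];
        }
        return fallback;
    }
}
[/code]

With it, the drink lookup becomes a single line: `string drinkType = SemanticHelper.GetFirstOrDefault(result.SemanticInterpretation.Properties, "drinkType", "none");`.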

Hopefully, you get an impression of the sort of power and flexibility that a grammar can give to speech recognition and how it goes beyond what you might want to code yourself by manipulating word lists.

Voice-command-file-based recognition

For completeness, the last way in which the SpeechRecognizer can be constrained is with a SpeechRecognitionVoiceCommandDefinitionConstraint. This is for scenarios where your app is activated by Cortana: the VoiceCommandActivatedEventArgs that it receives has a Result property whose Constraint is of this type, ready for interrogation. It is specific to those scenarios and not something that you would construct in your own code.
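For orientation only, here is a sketch of where that constraint shows up. It assumes an app that has already registered a Voice Command Definition (VCD) file with Cortana, and the override goes in the app's App class:

[code language="csharp"]
// Windows.ApplicationModel.Activation, Windows.Media.SpeechRecognition

// In App.xaml.cs (App derives from Windows.UI.Xaml.Application).
protected override void OnActivated(IActivatedEventArgs args)
{
    base.OnActivated(args);

    if (args.Kind == ActivationKind.VoiceCommand)
    {
        VoiceCommandActivatedEventArgs commandArgs = (VoiceCommandActivatedEventArgs)args;
        SpeechRecognitionResult result = commandArgs.Result;

        // The first RulePath entry is the name of the VCD command that matched.
        string commandName = result.RulePath[0];

        // The constraint here is supplied by the system, not constructed by us.
        bool fromVcd =
            result.Constraint is SpeechRecognitionVoiceCommandDefinitionConstraint;

        // A real app would navigate to a page here and call Window.Current.Activate().
        System.Diagnostics.Debug.WriteLine(
            $"Command '{commandName}' (VCD constraint: {fromVcd}): {result.Text}");
    }
}
[/code]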

Wrapping up

We’ve covered quite a lot of ground in this and the previous post about speech recognition and speech synthesis, all using the APIs provided by the Universal Windows Platform (UWP).

In the next post, we’ll look at some speech-focused APIs available to us in the cloud and see where they overlap with, and extend, the capabilities that we’ve seen so far.


Written by Mike Taulty (@mtaulty), Developer Evangelist, Microsoft DX (UK)