DirectML: Accelerating AI on Windows, now with NPUs

We are thrilled to announce our collaboration with Intel®, one of our key partners, to bring the first Neural Processing Unit (NPU) powered by DirectML to Windows. AI is transforming the world, driving innovation and creating value across industries. NPUs are critical components in enabling amazing AI experiences for developers and consumers alike.

An NPU is a processor built for machine learning (ML) workloads that are computationally intensive and do not require graphics interaction, and it runs them with efficient power consumption. These new devices will revolutionize how AI transforms our day-to-day experiences. We are excited to share that early next year we will release DirectML support for Intel® Core™ Ultra processors with Intel® AI Boost, the new integrated NPU.

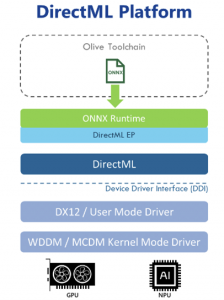

DirectML is a low-level, hardware-abstracted API that provides direct access to the hardware capabilities of modern devices, such as GPUs, for ML workloads. It is part of the DirectX family (the Windows graphics and gaming platform) and is designed to integrate with other DirectX components, such as DirectX 12. DirectML integrates with popular ML and tooling frameworks, such as ONNX Runtime, the cross-platform inference engine, and Olive, the Windows optimization tooling framework for ML models, thus simplifying the development and deployment of AI experiences across the Windows ecosystem.
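To illustrate the ONNX Runtime integration mentioned above, here is a minimal Python sketch of how an application might prefer the DirectML execution provider and fall back to the CPU. It assumes the `onnxruntime-directml` package is installed on Windows; the helper names `choose_providers` and `create_session` and the model path are hypothetical, and the sketch does not show how an NPU device would be selected, since that is not covered here.

```python
from typing import List, Sequence

# Execution provider names as registered by ONNX Runtime.
# "DmlExecutionProvider" is the DirectML provider.
PREFERRED_PROVIDERS = ("DmlExecutionProvider", "CPUExecutionProvider")


def choose_providers(
    available: Sequence[str],
    preferred: Sequence[str] = PREFERRED_PROVIDERS,
) -> List[str]:
    """Return the preferred providers that are available, in preference order."""
    chosen = [name for name in preferred if name in available]
    if not chosen:
        raise RuntimeError("None of the preferred execution providers is available")
    return chosen


def create_session(model_path: str):
    """Hypothetical helper: open an ONNX model, using DirectML when present."""
    # Imported lazily so the selection logic above works without ONNX Runtime.
    import onnxruntime as ort

    providers = choose_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, providers=providers)


if __name__ == "__main__":
    # Selection logic only; no model file or ONNX Runtime install is needed here.
    print(choose_providers(["DmlExecutionProvider", "CPUExecutionProvider"]))
    print(choose_providers(["CPUExecutionProvider"]))
```

Listing the CPU provider last means the same code should still run on machines without DirectML, only without hardware acceleration.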

By extending the hardware acceleration capabilities of DirectML to include NPU support, we are opening new possibilities for AI on Windows. DirectML with NPU support will be in developer preview in early 2024, along with the latest ONNX Runtime release, with support broadening over the course of 2024. Stay tuned for more announcements about key partners, expanded capabilities, and how to use DirectML for NPUs.

We can’t wait to see the amazing AI experiences you will create on Windows, with DirectML on Intel® Core™ Ultra processors.