Microsoft Expressive Pixels: a platform for creativity, inclusion and innovation

You’re sitting at your PC, at home, working remotely. You’ve got a partner who is doing the same.

Without you even having to turn around, an LED display visible to anyone nearby lights up with an emoji: a stop sign.

Now your household knows you’re busy for the moment, without you having to say a word.

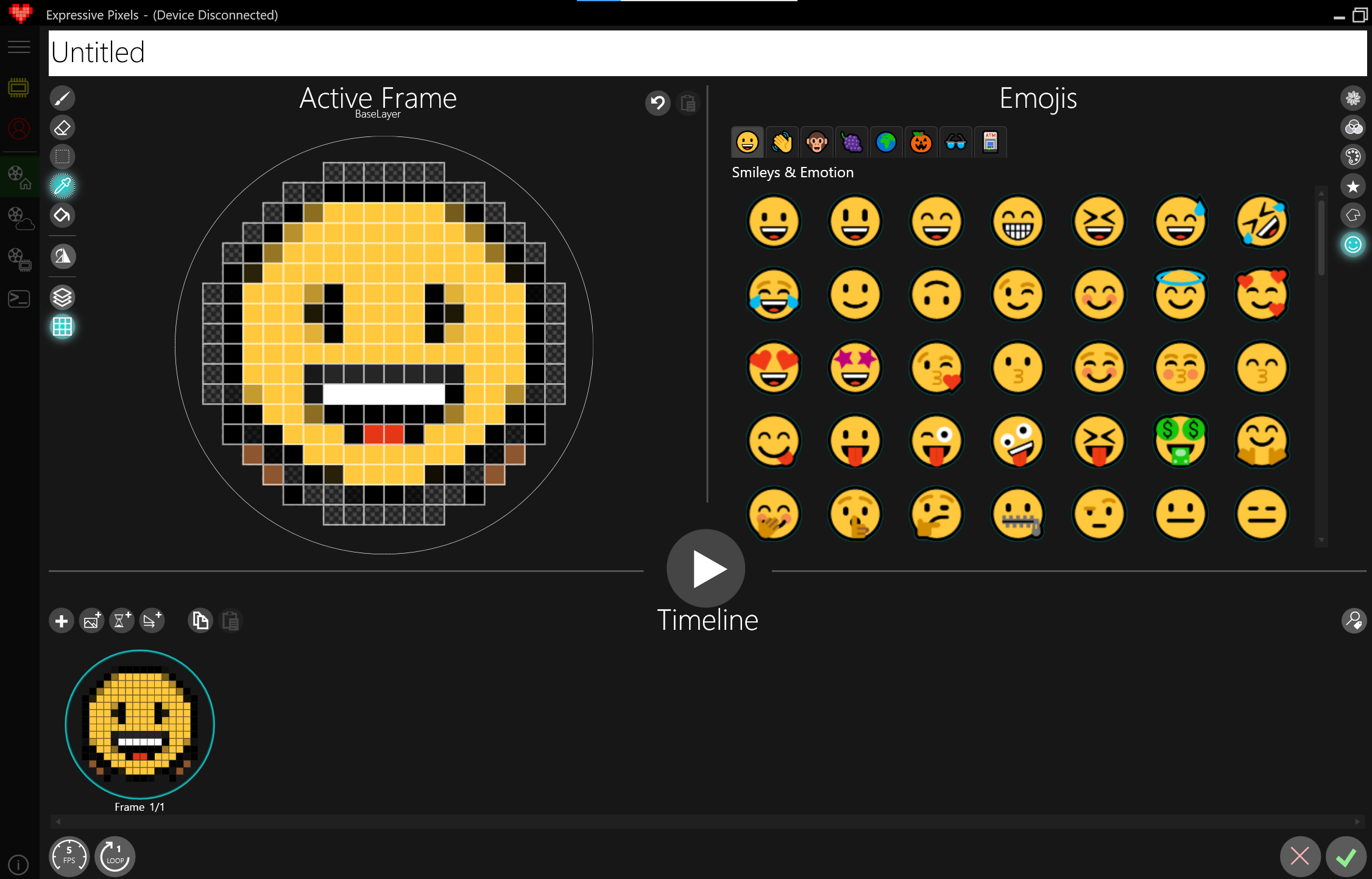

This is one of many uses for Expressive Pixels, a Windows 10 authoring platform focused on animated visualizations, now available for free in the Microsoft Store. In addition to the app, the platform includes a common set of maker firmware source code that enables users with an LED display device to communicate using visuals or emoji.

Taken together, the platform can expand non-verbal communication, drive creative applications that developers build through open-source APIs, and lower the barrier to entry for aspiring programmers, designers and researchers.

Expressive Pixels also acts as a springboard for amateur makers and more computer-savvy programmers. It provides a library that handles the animation, so they can build new creations without first having to figure out how to load and play animations and images on small devices.

No matter where you are working, learning or connecting, Expressive Pixels delivers an animated way to personalize your space and augment your presence, in the same way pictures and knickknacks do.

“We focus so much on basic day-to-day functions, but if you look at what makes us human, there are emotions. We want to express and connect with each other,” says Bernice You, a general manager of strategy and projects in Small, Medium & Corporate Business at Microsoft. You is on the Expressive Pixels project team and was also instrumental in the 2019 release of the Eyes First games, which incorporate Windows 10 Eye Control, a key accessibility feature for people with speech and mobility disabilities. “Being productive is great, but we want to be humans too. It’s quite an untouched space.”

The Expressive Pixels project draws on years of deep and meaningful collaboration between the Enable Group at Microsoft and people with severe speech and mobility disabilities. The aim was to understand their perspectives, needs and problems as they pertain to communication, creative expression, identity and human connection, with a focus on augmenting traditional AAC devices with novel experiences that serve as proxies for non-verbal communication, personal and creative expression, social cues and device status.

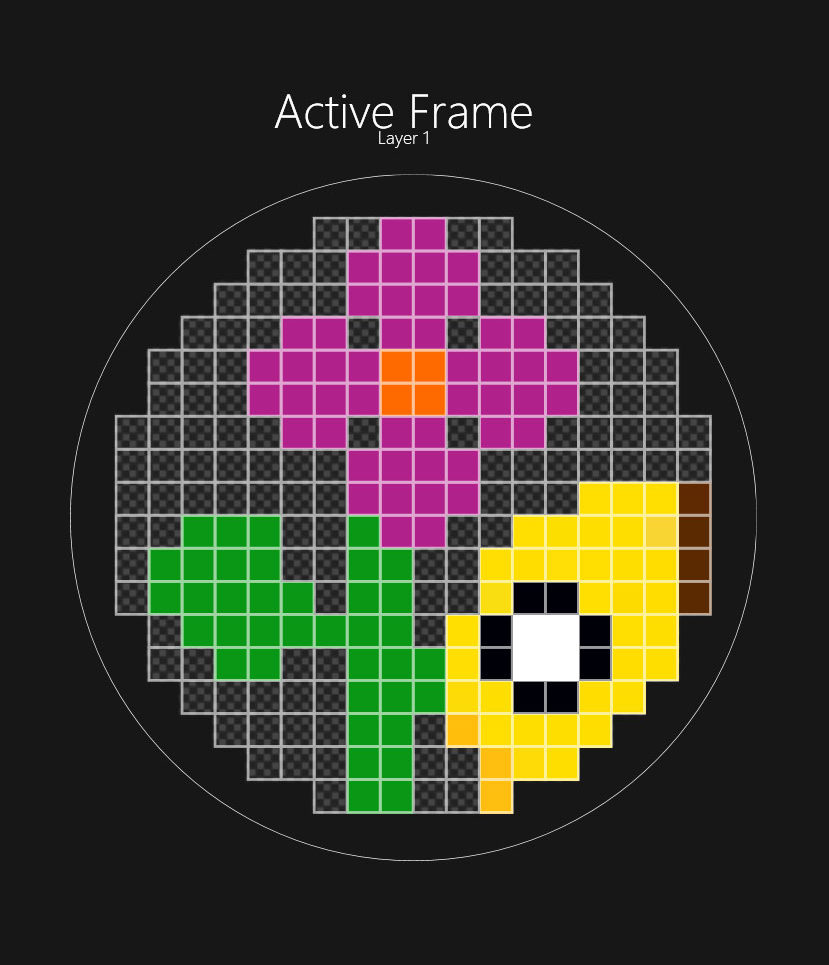

Expressive Pixels designs

“This can be both a serious thing and a fun and creative way of saying something about yourself,” says Harish Kulkarni, an engineering manager on the AI frameworks team within Microsoft’s Cloud and AI group, who was also part of the Enable team for several years and a guiding force in integrating Eye Control in Windows 10.

Along the way to this release, the team unlocked all kinds of serendipitous innovation, consistently tackling obstacles with an open and fresh perspective inspired by the value the technology could bring to people.

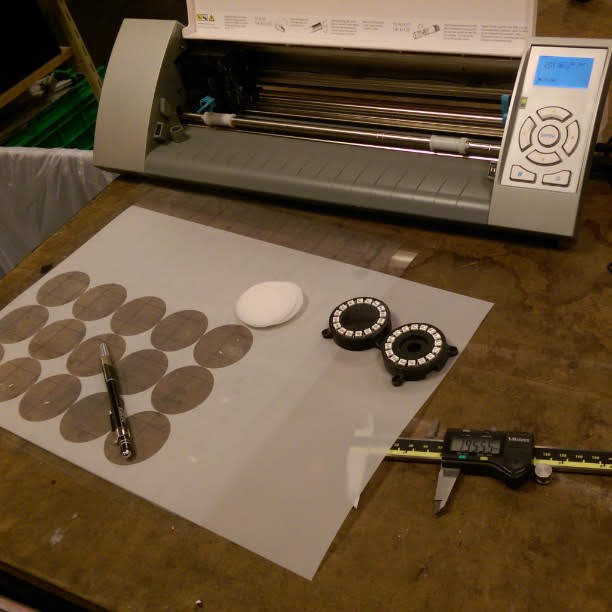

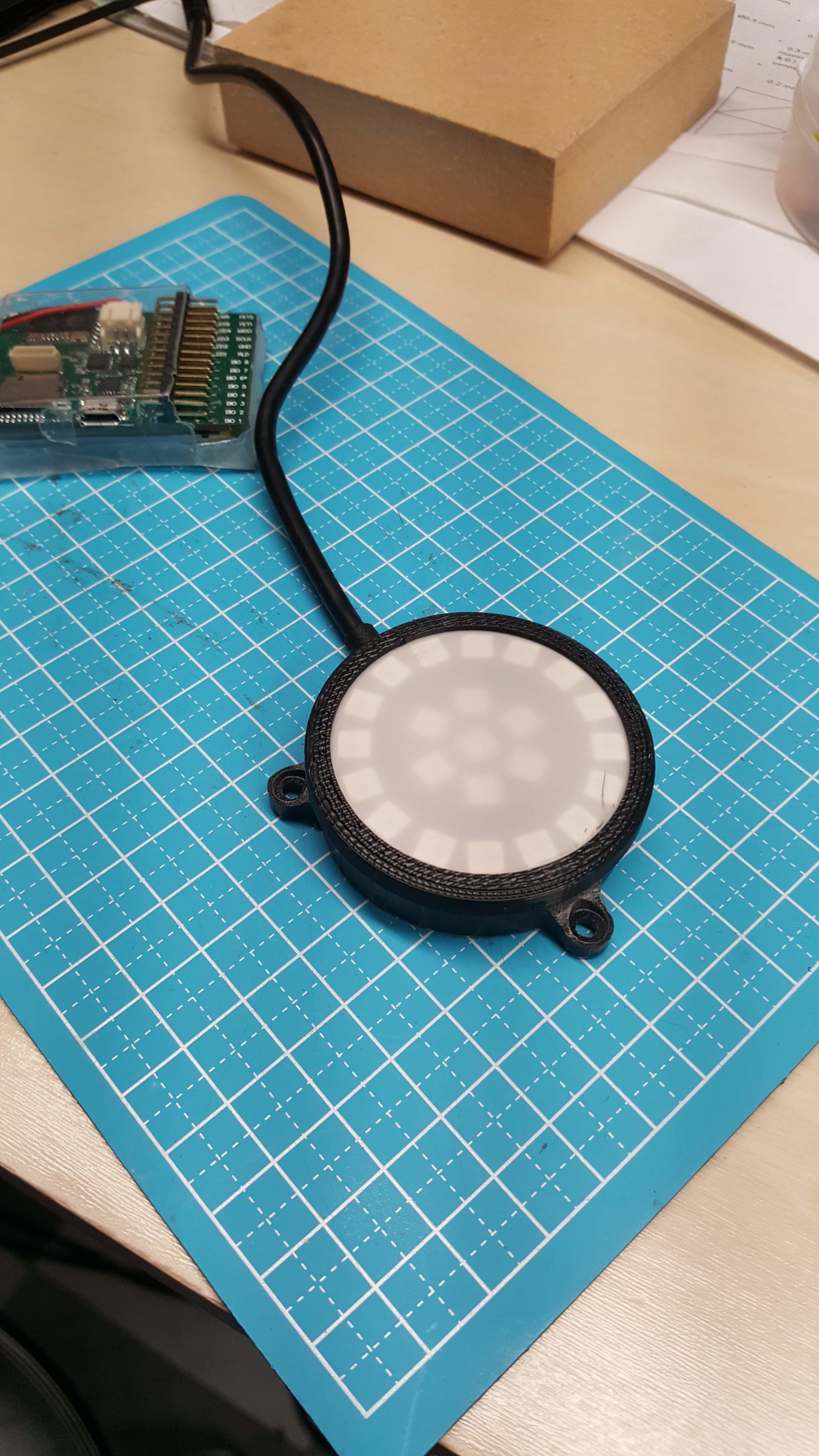

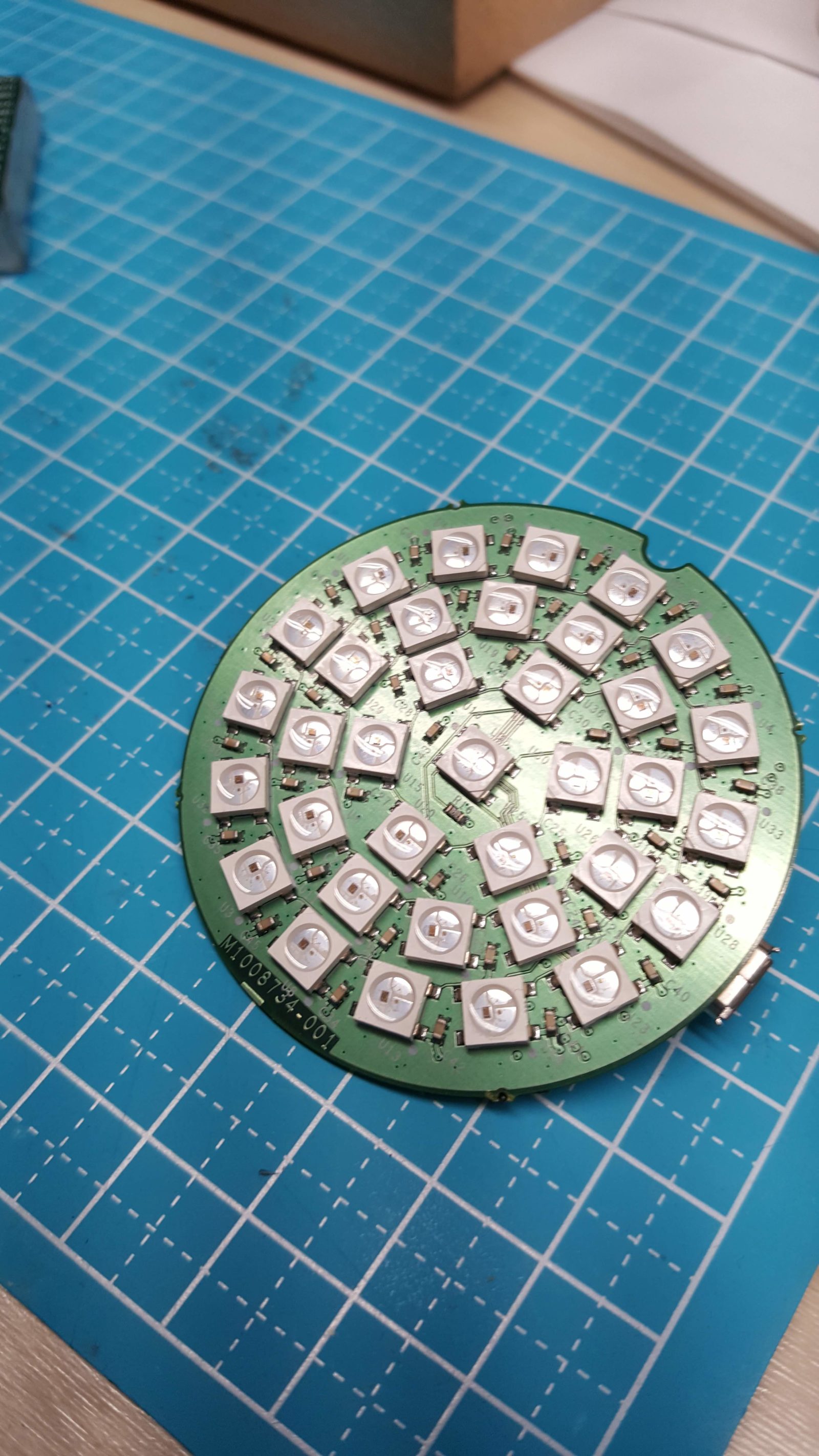

When they realized they needed a form factor that could easily be seen across a room and in a variety of lighting conditions, they turned to user-friendly LED displays. These are available off the shelf from third-party producers such as SparkFun, Adafruit and SiliconSquared Displays (the latter a custom-made, all-in-one device conceived and engineered to address resolution, connectivity, storage and animation abilities, made by the Expressive Pixels team’s sole engineer, Gavin Jancke). But you can also use Expressive Pixels without an LED display or other device, simply by inserting animated GIFs into emails.

Then came another hurdle: developing software that would make it easy to render animations on the displays. Again, Jancke took up the challenge and engineered the firmware. Together, the firmware and the authoring app form a platform that can run on a multitude of devices. Makers can use specific parts of the firmware or build on it, and creators can share their original animations in a Cloud Gallery in the app.

Jancke’s been instrumental in developing these pivotal elements for Expressive Pixels – and all in his spare time, away from his day job.

“Communication is not just what your text says or what you're speaking. There's a whole lot of nonverbal communication happening, including knowing when it’s your turn to speak.”- Harish Kulkarni

SiliconSquared Display

“Generally when I get involved in something, I make a royal meal out of it,” jokes Jancke, a general manager of engineering within Microsoft Research. But reflecting more seriously, he adds, “My passion is serving underserved populations or communities, to give them capabilities that are out of their reach because of their technical ability, but within my technical ability.”

Jancke is a jack of all trades who gained new skills – including electrical engineering – over the three years he invested in the project. Along the way he created a new device category: a higher-resolution RGB LED display than had previously been available. He also figured out how to untether the experience for maximum mobility, incorporating Bluetooth technology and different mechanisms to trigger animations, such as switch inputs or MIDI music signals.

“What’s really interesting is the unintended consequences of pushing yourself out of your comfort zone, learning something new,” he says. “You gain surprising insights and abilities.”

The road to the release of Expressive Pixels began more than five years ago with the Enable Group’s interest in improving AAC (Augmentative and Alternative Communication) devices, which are usually cost-prohibitive because they’re part of the medical market.

Principal design researcher Ann Paradiso led the efforts, guided by a desire to apply her energy in service to others. She had worked on Jancke’s team in Microsoft Research before moving over to the Enable Group, so it was natural for him to help her as this project gained momentum.

Early on, they worked with former NFL player Steve Gleason, who, after he was diagnosed with ALS (amyotrophic lateral sclerosis), helped inspire and drive the research that would lead to their project. Even though he is considered adept at using eye-tracking technology, he still had trouble letting others know when he had technical problems, because his eyes were focused on his screen rather than on the person trying to converse with him.

“If you spend any amount of time with anybody using a speech device with their eyes only, you’ll notice that the pace of the conversation slows down drastically from conversational speech. AAC users frequently see a 12- to 25-fold decrease in word rate, even with improvements in prediction and eye gaze accuracy,” Paradiso says. “So what we see a lot is a pattern where conversation partners either don’t learn to sit with the extra silence or they are unable to see that a response is being composed, so the conversation moves on and the person with the speech-related disability gets left behind.”

That led to user-centered research alongside people with ALS (PALS) and their families. Paradiso and her collaborators met regularly with them, accompanying them to clinical appointments and observing their interactions with neurologists, speech pathologists, physical therapists and more. The team would go to PALS’ homes and invite them into the team’s labs at Microsoft. The trust and relationships that came from this enabled the team to really get to know the whole ecosystem around disability: support systems, families, equipment, what worked and what didn’t.

“One of the things we realized pretty quickly was that there could be high value in optimizing LED displays for emoji-based communication.”- Ann Paradiso

“Emojis are already ubiquitous in digital communication platforms, including text-based messaging, email and social media. They require fewer ‘clicks’ to express intent, add context, or set tone. A single emoji can dramatically alter or enhance the interpretation of a message. We think they can be leveraged as a supplemental expressive proxy for people who may not have access to speech or the muscles that drive facial expression,” Paradiso says. “Our PALS collaborators have been some of the funniest, most thoughtful and creative people I’ve known, but their expressive range can be limited by the constraints of disability as well as today’s speech devices and their underlying technologies. We know that people want to express a lot more with their AAC devices than basic transactional communication. We wanted to create something that would help people stay more actively engaged in conversations, be visible in low light and from a distance, and provide another avenue for unique expression, playfulness and connection.”

The team looked at different types of secondary displays, but they kept coming back to LED displays for several reasons: they’re low-cost, they work reasonably well and “they have kind of a cool factor.” The team’s PALS collaborators also made it clear they didn’t want to use anything that could have unintended negative social consequences for them.

“Watching somebody living with ALS and being able to empower them to do something that they previously had given up all hope of being able to do is enough to inspire you to want to do more,” says Dwayne Lamb, a developer specializing in user experience and user interface creation, who joined the Enable Group in early 2017. “Most of the time when you’re trying to communicate with somebody who can only use their eyes to communicate, they’re using their eyes to type into a keyboard on a device right in front of them, and although it’s not socially good etiquette, you commonly find yourself kind of looking over their shoulder to try and see what they’re typing.”

Expressive Pixels evolved in part from wanting to solve that problem.

With Expressive Pixels, it’s possible to create animations for displays of many sizes, up to 64 x 64 pixels, says Christopher O’Dowd, who helped fill the hardware gaps on the project. He points out that LED displays are ubiquitous at Maker Faires and on houses during the holidays. They are so versatile that you can even find them on fabric (face masks, caps and backpacks, for instance) or banners.

One early way the team incorporated LED displays was through Hands-Free Music, an award-winning SXSW project.

There, they used a custom MIDI-enabled, music-synced LED array as a supplemental visualization for an eye-controlled, physical drum rig designed for one of their collaborators, a Seattle-area musician living with ALS. The project, which won the 2018 SXSW Interactive Innovation Award: Music and Audio Innovation, features a suite of novel eye-controlled applications for music performance, collaboration and composition.

“How does someone without access to speech or movement compose or perform music, command a stage, or connect with a live audience? What about collaborating with other musicians in rehearsed or improvisational scenarios? How can we lower the barriers to making school music programs more inclusive without ‘othering’ or minimizing the students with disabilities? These were some of our foundational questions,” Paradiso recalled. “We wanted to adapt our technology and designs to align with a person’s creative goals and real-life scenarios, rather than the other way around.”

Lamb came up with the idea of adding Musical Instrument Digital Interface (MIDI) capability, so that different signals could be sent to different instruments – an idea that would carry over to Expressive Pixels.

“Every time we worked toward a different goal, we’d push the platform further,” Paradiso says.

The Expressive Pixels platform is open to a broad range of creators. In addition to the maker community, students can design for others and learn JavaScript through Microsoft MakeCode. MakeCode devices generally have only a small handful of LEDs, Jancke says, so attaching a true display, such as a SiliconSquared Display, to a MakeCode gadget opens up a wide range of new ways for students to create, experiment and program.

“Expressive Pixels provides an easy way for students to integrate animations produced from the authoring app into their MakeCode programming and hardware creations with minimal fuss,” Jancke says.

The app itself knows which animations are stored on the device, so different commands can trigger specific animations on it.

Through this and other Enable projects, Paradiso found herself rediscovering her passion for the company after nearly 20 years there.

“To see so many people coming together, many on their personal time, to work on behalf of something bigger than us individually is inspired and aligned with our company’s values and mission, and that makes me happy and hopeful for a more inclusive, creative future for everyone,” Paradiso says.

Go to the Expressive Pixels site to find out more.