The Language APIs attack the problem of meaning from many different angles. There isn’t time to go into all of them in depth here, but here’s a quick overview so you know what is possible with the six Cognitive Services Language APIs:

- Bing Spell Check doesn’t just clean up misspellings; it also recognizes slang, understands homonyms and fixes bad word breaks.

- Microsoft Translator API, built on deep neural networks, can do speech translation for 9 supported languages and text translation between 60 languages.

- Web Language Model API uses a large reservoir of data about language usage on the web to make predictions such as how to insert word breaks in a run-on sentence (or a hashtag or URL), how likely a sequence of words is to appear together and which word is most likely to follow a given word sequence (sentence completion).

- Linguistic Analysis parses text for you into sentences, then into parts of speech (nouns, verbs, adverbs, etc.), and finally into phrases (meaningful groupings of words such as prepositional phrases, relative clauses and subordinate clauses).

- Text Analytics sifts through a block of text to determine the language it is written in (it recognizes 160 languages), pick out key phrases and score the overall sentiment from 0 (completely negative) to 1 (completely positive).

- Language Understanding Intelligent Service (LUIS) provides a quick and easy way to determine what your users want by parsing sentences for entities (nouns) and intents (verbs), which can then be passed to appropriate services for fulfillment. For instance, “I want to hear a little night music” could open up a preferred music streaming service and commence playing Mozart. LUIS can be used with bots and speech-driven applications.
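To make the word-break idea from the Web Language Model bullet concrete, here is a toy sketch in C#. It does not call the Web Language Model API; instead it swaps in a tiny hand-written vocabulary with made-up log probabilities (hypothetical numbers standing in for the web-scale statistics the service draws on) and uses dynamic programming to pick the most probable way to split a run-on string such as a hashtag.

```csharp
using System;
using System.Collections.Generic;

static class WordBreakSketch
{
    // Hypothetical unigram log probabilities; a real language model is vastly larger.
    static readonly Dictionary<string, double> LogProb = new Dictionary<string, double>
    {
        ["a"] = -3.0, ["lit"] = -7.0, ["little"] = -5.0, ["night"] = -5.5,
        ["nigh"] = -9.0, ["t"] = -10.0, ["music"] = -5.2
    };

    // Returns the highest-scoring split of text into known words,
    // or null if the text cannot be split using the vocabulary.
    public static List<string> Segment(string text)
    {
        int n = text.Length;
        // best[i] holds the best total log probability for the prefix text[0..i).
        var best = new double[n + 1];
        var back = new int[n + 1];
        for (int i = 1; i <= n; i++) best[i] = double.NegativeInfinity;
        best[0] = 0.0;

        for (int end = 1; end <= n; end++)
        {
            for (int start = 0; start < end; start++)
            {
                if (double.IsNegativeInfinity(best[start])) continue;
                if (!LogProb.TryGetValue(text.Substring(start, end - start), out double lp)) continue;
                double score = best[start] + lp;
                if (score > best[end]) { best[end] = score; back[end] = start; }
            }
        }
        if (double.IsNegativeInfinity(best[n])) return null;

        // Follow the back-pointers from the end of the string to recover the words.
        var words = new List<string>();
        for (int end = n; end > 0; end = back[end])
            words.Insert(0, text.Substring(back[end], end - back[end]));
        return words;
    }
}
```

For example, `Segment("alittlenightmusic")` prefers "a little night music" over "a little nigh t music" because its summed log probability is higher; the real service makes the same kind of choice with far richer statistics.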

That’s the mile-high overview. Let’s now take a closer look at the last two Language APIs in this list.

Digging into Text Analytics

The Cognitive Services Text Analytics API is designed to do certain things very well, such as evaluating web page reviews and comments, and this simple scenario opens up many possibilities. For instance, you could use this basic functionality to evaluate the opening passages of famous novels. The REST interface is straightforward: you pass a block of text to the service and ask Text Analytics to return the key phrases, the language the text is written in, or a sentiment score from 0 to 1 indicating whether the passage is negative or positive in tone.
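In request terms, each of the v2.0 endpoints used later in this post takes a JSON body with a documents array whose entries carry an id and the text to analyze. As a sketch (the class and method names are hypothetical, and it assumes System.Text.Json, which ships with .NET Core 3.0 and later), that body can be built with a serializer instead of string concatenation, so quotes and line breaks in the text are escaped correctly:

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

static class TextAnalyticsRequest
{
    // Serializes passages into {"documents":[{"id":"1","text":"..."}, ...]},
    // numbering the ids "1", "2", ... in input order.
    public static string BuildBody(IEnumerable<string> passages) =>
        JsonSerializer.Serialize(new
        {
            documents = passages.Select((text, i) => new { id = (i + 1).ToString(), text })
        });
}
```

Numbering the documents in order lets you match each result in the reply back to the passage it came from.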

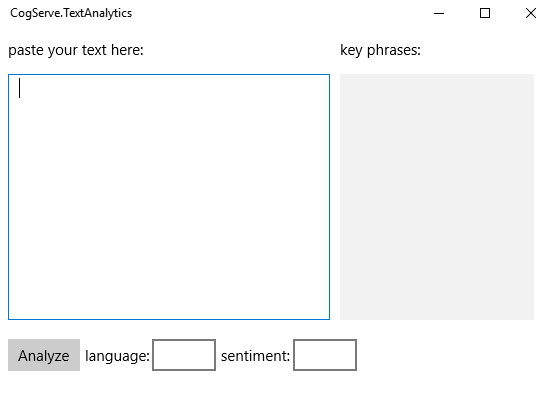

The user interface for this app is going to be pretty simple. You want a TextBox for the text you need to have analyzed, a ListBox to hold the key phrases and two TextBlocks to display the language and sentiment score. And, of course, you need a Button to fire the whole thing off with a call to the Text Analytics service endpoint.

When the Analyze button is clicked, the app will use the HttpClient class to build a REST call to the service and retrieve, one at a time, the key phrases, the language and the sentiment. The sample code below uses a helper method, CallEndpoint, to construct the request. You’ll want to have a good JSON deserializer like Newtonsoft’s Json.NET (which is available as a NuGet package) to make it easier to parse the returned messages. Also, be sure to request your own subscription key to use Text Analytics.

```csharp
// Namespaces this snippet needs. RoutedEventArgs comes from your UI framework
// (System.Windows for WPF, Windows.UI.Xaml for UWP).
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;
using System.Threading.Tasks;
using Newtonsoft.Json;

// The members below belong in the code-behind class of the page that hosts
// myTextBox and the Analyze button.
readonly string _subscriptionKey = "xxxxxx1a89554dd493177b8f64xxxxxx";
readonly string _baseUrl = "https://westus.api.cognitive.microsoft.com/";

// POSTs a JSON body to a Text Analytics endpoint and returns the raw JSON reply.
static async Task<string> CallEndpoint(HttpClient client, string uri, byte[] byteData)
{
    using (var content = new ByteArrayContent(byteData))
    {
        content.Headers.ContentType = new MediaTypeHeaderValue("application/json");
        var response = await client.PostAsync(uri, content);
        return await response.Content.ReadAsStringAsync();
    }
}

private async void btnAnalyze_Click(object sender, RoutedEventArgs e)
{
    using (var client = new HttpClient())
    {
        client.BaseAddress = new Uri(_baseUrl);

        // Request headers
        client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", _subscriptionKey);
        client.DefaultRequestHeaders.Accept.Add(new MediaTypeWithQualityHeaderValue("application/json"));

        // Build the request body {"documents":[{"id":"1","text":"..."}]}.
        // Serializing (instead of concatenating strings) escapes any quotes
        // or backslashes in the user's text.
        string textToAnalyze = myTextBox.Text;
        string body = JsonConvert.SerializeObject(new
        {
            documents = new[] { new { id = "1", text = textToAnalyze } }
        });
        byte[] byteData = Encoding.UTF8.GetBytes(body);

        // Detect key phrases:
        var uri = "text/analytics/v2.0/keyPhrases";
        var response = await CallEndpoint(client, uri, byteData);
        var keyPhrases = JsonConvert.DeserializeObject<KeyPhrases>(response);

        // Detect just one language:
        var queryString = "numberOfLanguagesToDetect=1";
        uri = "text/analytics/v2.0/languages?" + queryString;
        response = await CallEndpoint(client, uri, byteData);
        var detectedLanguages = JsonConvert.DeserializeObject<LanguageArray>(response);

        // Detect sentiment:
        uri = "text/analytics/v2.0/sentiment";
        response = await CallEndpoint(client, uri, byteData);
        var sentiment = JsonConvert.DeserializeObject<Sentiments>(response);

        DisplayReturnValues(keyPhrases, detectedLanguages, sentiment);
    }
}
```

Remarkably, this is all the code you need to access the rich functionality of the Text Analytics API. To save space, the class definitions for KeyPhrases, LanguageArray and Sentiments (and the DisplayReturnValues helper) have been left out; you should be able to reconstruct them yourself from the returned JSON strings.
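Here is one possible reconstruction of those classes, sketched from the v2.0 response shapes as we understand them (each reply carries a documents array plus an errors array). Treat the property names as assumptions to check against the JSON your subscription actually returns; the per-document helper classes and DocumentError are hypothetical names. Plain PascalCase properties are used because Json.NET's JsonConvert matches JSON names such as keyPhrases case-insensitively by default.

```csharp
using System.Collections.Generic;

// Key phrases reply (assumed): {"documents":[{"id":"1","keyPhrases":["..."]}],"errors":[]}
public class KeyPhrases
{
    public List<KeyPhraseDocument> Documents { get; set; } = new List<KeyPhraseDocument>();
    public List<DocumentError> Errors { get; set; } = new List<DocumentError>();
}

public class KeyPhraseDocument
{
    public string Id { get; set; }
    public List<string> KeyPhrases { get; set; } = new List<string>();
}

// Language reply (assumed):
// {"documents":[{"id":"1","detectedLanguages":[{"name":"English","iso6391Name":"en","score":1.0}]}],"errors":[]}
public class LanguageArray
{
    public List<LanguageDocument> Documents { get; set; } = new List<LanguageDocument>();
    public List<DocumentError> Errors { get; set; } = new List<DocumentError>();
}

public class LanguageDocument
{
    public string Id { get; set; }
    public List<DetectedLanguage> DetectedLanguages { get; set; } = new List<DetectedLanguage>();
}

public class DetectedLanguage
{
    public string Name { get; set; }
    public string Iso6391Name { get; set; }
    public double Score { get; set; }
}

// Sentiment reply (assumed): {"documents":[{"id":"1","score":0.93}],"errors":[]}
public class Sentiments
{
    public List<SentimentDocument> Documents { get; set; } = new List<SentimentDocument>();
    public List<DocumentError> Errors { get; set; } = new List<DocumentError>();
}

public class SentimentDocument
{
    public string Id { get; set; }
    public double Score { get; set; } // 0 = negative, 1 = positive
}

// Documents the service could not process are reported here instead.
public class DocumentError
{
    public string Id { get; set; }
    public string Message { get; set; }
}
```

If you deserialize with System.Text.Json instead of Json.NET, pass `new JsonSerializerOptions { PropertyNameCaseInsensitive = true }` so the camelCase JSON names map onto these properties.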

According to Text Analytics, the opening of James Joyce’s Ulysses (sentiment 0.93) is much more positive than the opening of Charles Dickens’ A Tale of Two Cities (0.67). You don’t have to limit yourself to the mood of famous opening passages, however. You could also paste in posts from your favorite social network. In fact, you can search social media for posts about a topic of interest and find out the average sentiment toward it.
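Because the documents array in the request can hold several posts, a single sentiment call can score a whole batch, and the average is then simple arithmetic. The sketch below assumes the v2.0 sentiment reply lists one object with an id and a score for each document it could analyze; it reads the reply with System.Text.Json's JsonDocument so it does not depend on any particular DTO classes, and the class and method names are hypothetical.

```csharp
using System;
using System.Text.Json;

static class SentimentStats
{
    // Returns the mean "score" in a reply shaped like
    // {"documents":[{"id":"1","score":0.9}, ...],"errors":[...]}.
    // Documents the service could not score appear under "errors" instead,
    // so they do not affect the mean.
    public static double AverageScore(string sentimentJson)
    {
        using (JsonDocument doc = JsonDocument.Parse(sentimentJson))
        {
            double sum = 0;
            int count = 0;
            foreach (JsonElement d in doc.RootElement.GetProperty("documents").EnumerateArray())
            {
                sum += d.GetProperty("score").GetDouble();
                count++;
            }
            if (count == 0)
                throw new InvalidOperationException("The reply contained no scored documents.");
            return sum / count;
        }
    }
}
```

A plain mean treats every post equally; weighting posts by reach would be a straightforward variation.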

You can probably see where we’re going with this. If you are running a social media campaign, you could use Text Analytics to do a qualitative evaluation of the campaign based on how the audience responds. You could even run tests to see if changes to the campaign will cause the audience’s mood to shift.

Using LUIS to figure out what your user wants

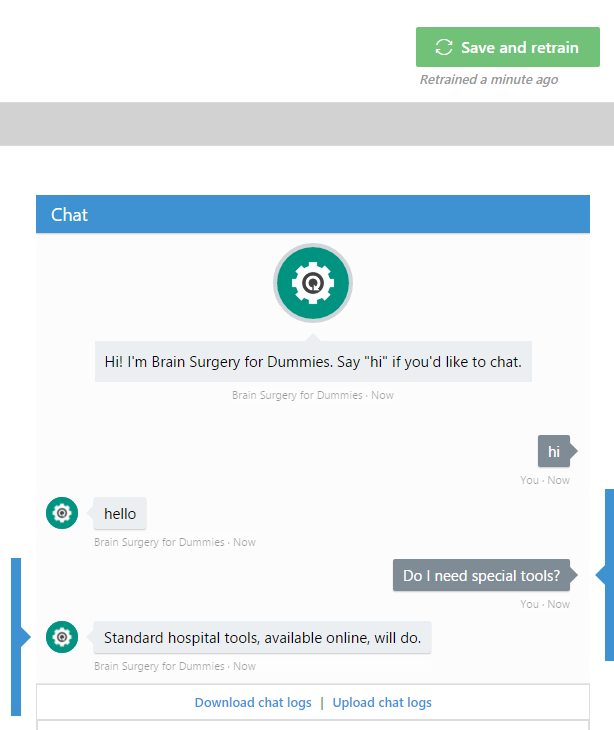

LUIS lets you build language intelligence into your speech-driven apps. Based on things your users might say, LUIS attempts to parse each statement to figure out the Intent behind it (what your user wants to do) and the Entities involved in that request. For instance, if your app makes travel arrangements, the Intents you are interested in are booking and cancellation, while the Entities you care about are travel dates and number of passengers. For a music-playing app, the Intents you care about are playing and pausing, while the Entities are particular songs.

To use LUIS, you first need to sign in through the LUIS website and either use one of the pre-built Cortana apps or build a new app of your own. The pre-built apps are fairly extensive, and for a simple language-understanding task like evaluating the phrase “Play me some Mozart,” they have no problem identifying both the intent and the entity involved.

```json
{
  "query": "play me some mozart",
  "intents": [
    {
      "intent": "builtin.intent.ondevice.play_music"
    }
  ],
  "entities": [
    {
      "entity": "mozart",
      "type": "builtin.ondevice.music_artist_name"
    }
  ]
}
```
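Pulling the intent and the entities out of a response like the one above takes only a few lines. The sketch below parses that JSON with System.Text.Json's JsonDocument; it assumes only the query, intents and entities fields shown, treats the first intent listed as the one to act on, and the class and method names are hypothetical.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

static class LuisReply
{
    // Returns the first intent plus (type, entity) pairs from a reply shaped like
    // {"query":"...","intents":[{"intent":"..."}],"entities":[{"entity":"...","type":"..."}]}.
    public static (string Intent, List<(string Type, string Entity)> Entities) Parse(string json)
    {
        using (JsonDocument doc = JsonDocument.Parse(json))
        {
            JsonElement root = doc.RootElement;
            string intent = root.GetProperty("intents").EnumerateArray()
                                .Select(i => i.GetProperty("intent").GetString())
                                .FirstOrDefault();
            // Materialize the strings before the JsonDocument is disposed.
            var entities = root.GetProperty("entities").EnumerateArray()
                               .Select(e => (e.GetProperty("type").GetString(), e.GetProperty("entity").GetString()))
                               .ToList();
            return (intent, entities);
        }
    }
}
```

For the “play me some mozart” response, `Parse` yields the intent builtin.intent.ondevice.play_music and a single entity, mozart, of type builtin.ondevice.music_artist_name.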

If your app does music streaming, this quick call to Cognitive Services provides all the information you need to fulfill your user’s wish. A full list of pre-built applications is available in the LUIS.ai documentation. To learn more about building applications with custom Intents and Entities, work through these training videos.
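Fulfilling the request then comes down to routing on the intent name. The following sketch maps intent names to handlers in a dictionary. The play_music intent name comes from the LUIS response in this post, while "PauseMusic" is a hypothetical custom intent, and both handler bodies are placeholders (they return a description instead of driving a real streaming service).

```csharp
using System;
using System.Collections.Generic;

static class IntentRouter
{
    // Maps an intent name to a handler that receives the entity text (for example an artist).
    static readonly Dictionary<string, Func<string, string>> Handlers =
        new Dictionary<string, Func<string, string>>
        {
            ["builtin.intent.ondevice.play_music"] = artist => $"Playing music by {artist}",
            ["PauseMusic"] = _ => "Pausing playback" // hypothetical custom intent
        };

    // Runs the matching handler, or returns a fallback for unknown or missing intents.
    public static string Dispatch(string intent, string entity) =>
        intent != null && Handlers.TryGetValue(intent, out var handler)
            ? handler(entity)
            : "Sorry, I didn't understand that.";
}
```

Keeping the routing in a table means supporting a new intent is one more dictionary entry rather than another branch in a growing switch.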

Wrapping Up

Cognitive Services provides some remarkable machine learning-based tools to help you determine meaning and intent based on human utterances, whether these utterances come from an app user talking into his or her device or someone providing feedback on social media. The following links will help you discover more about the capabilities the Cognitive Services Language APIs put at your disposal.